NVIDIA HGX A100 Systems Supercharged with A100 80GB PCIe, NDR 400G InfiniBand and Magnum IO to Accelerate AI and HPC

NVIDIA has added the NVIDIA A100 80GB PCIe GPU, NVIDIA NDR 400G InfiniBand networking, and NVIDIA Magnum IO GPUDirect Storage software to its HGX AI supercomputing platform to provide the performance needed for industrial HPC innovation. High-tech industrial pioneer General Electric is using the HGX platform for computational fluid dynamics simulations that guide design innovation in large gas turbines and jet engines. The A100 Tensor Core GPUs deliver unprecedented HPC acceleration to solve complex AI, data analytics, model training and simulation challenges. HPC systems that require unparalleled data throughput are supercharged by NVIDIA InfiniBand, and Magnum IO GPUDirect Storage enables direct memory access between GPU memory and storage.

NVIDIA A100 80GB PCIe Performance Enhancements for AI and HPC

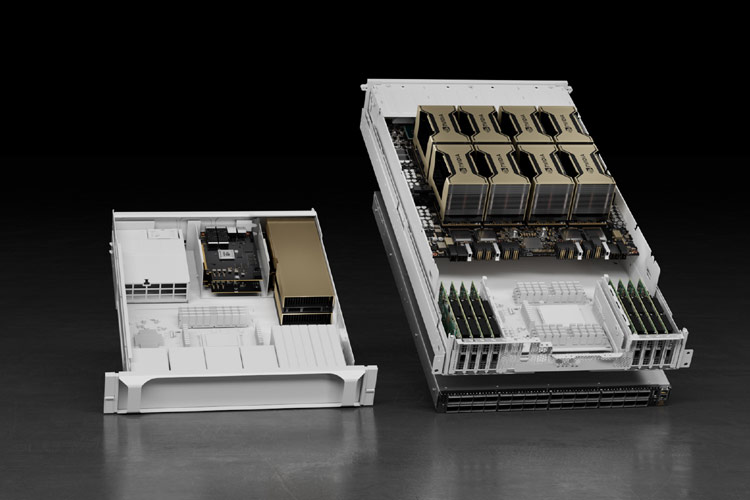

A100 80GB PCIe GPUs increase GPU memory bandwidth by 25 percent over the A100 40GB and provide 80GB of HBM2e high-bandwidth memory. The large memory capacity and high memory bandwidth allow more data and larger neural networks to be held in memory, minimizing internode communication and energy consumption. This enables researchers to achieve higher throughput and faster results, maximizing the value of their IT investments. A100 80GB PCIe is powered by the NVIDIA Ampere architecture, which features Multi-Instance GPU (MIG) technology to deliver acceleration for smaller workloads such as AI inference. MIG allows HPC systems to scale compute and memory down with guaranteed quality of service. In addition to the PCIe form factor, the A100 is available in four- and eight-way NVIDIA HGX A100 configurations.

Next-Generation NDR 400Gb/s InfiniBand Switch Systems

HPC systems that require unparalleled data throughput are supercharged by NVIDIA InfiniBand. The NVIDIA Quantum-2 fixed-configuration switch systems deliver 64 ports of NDR 400Gb/s InfiniBand (or 128 ports of NDR200), providing 3x higher port density than HDR InfiniBand. The NVIDIA Quantum-2 modular switches provide scalable port configurations up to 2,048 ports of NDR 400Gb/s InfiniBand (or 4,096 ports of NDR200) with a total bidirectional throughput of 1.64 petabits per second, 5x that of the previous generation. The 2,048-port switch provides 6.5x greater scalability than the previous generation, with the ability to connect more than a million nodes with just three hops using a DragonFly+ network topology.
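The 1.64 petabits per second figure can be checked with simple arithmetic: port count times per-port rate, doubled for the two directions. The short Python sketch below is illustrative only. The function name and the decimal unit conversion (1 Pb/s = 1,000,000 Gb/s) are assumptions made for this example, not part of any NVIDIA tool.

```python
# Illustrative arithmetic only; the helper name and decimal units are assumptions.
GBPS_PER_PBPS = 1_000_000  # 1 Pb/s = 10^6 Gb/s in decimal units


def bidirectional_throughput_pbps(ports: int, port_rate_gbps: int) -> float:
    """Aggregate throughput in Pb/s, counting both directions of every port."""
    return ports * port_rate_gbps * 2 / GBPS_PER_PBPS


# Modular switch at its largest configuration: 2,048 NDR ports or 4,096 NDR200 ports.
modular_ndr = bidirectional_throughput_pbps(2048, 400)
modular_ndr200 = bidirectional_throughput_pbps(4096, 200)

# Fixed-configuration switch: 64 NDR ports.
fixed_ndr = bidirectional_throughput_pbps(64, 400)

print(f"Modular, 2,048 x NDR:    {modular_ndr:.2f} Pb/s")
print(f"Modular, 4,096 x NDR200: {modular_ndr200:.2f} Pb/s")
print(f"Fixed, 64 x NDR:         {fixed_ndr * 1000:.1f} Tb/s")
```

Splitting each 400Gb/s port into two NDR200 ports doubles the port count and halves the per-port rate, so the aggregate throughput is the same in either configuration.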

Magnum IO GPUDirect Storage Software

Magnum IO GPUDirect Storage provides a direct path between GPU memory and storage. This enables applications to benefit from lower I/O latency and use the full bandwidth of the network adapters while decreasing the utilization load on the CPU and managing the impact of increased data consumption.